
When I asked ChatGPT to name the editor in chief of Spektrum der Wissenschaft, the German-language sister publication of Scientific American, the answer was, “My information is current up to 2021, and at that time Dr. Florian Freistetter was the editor-in-chief of Spektrum der Wissenschaft. However, it is possible that the information has changed since then.” This highlights one of the biggest drawbacks of current language-generating artificial intelligence programs: they “hallucinate.” Although Spektrum der Wissenschaft features a lot of work by Freistetter, he was never a staff member, let alone editor in chief, of Spektrum der Wissenschaft. That is why it is important to work on so-called explainable AI (XAI) models that can justify their answers and thus become more transparent.

Most AI programs function like a “black box.” “We know exactly what a model does but not why it has now specifically recognized that a picture shows a cat,” computer scientist Kristian Kersting of the Technical University of Darmstadt in Germany told the German-language newspaper Handelsblatt. That dilemma prompted Kersting, along with computer scientists Patrick Schramowski of the Technical University of Darmstadt and Björn Deiseroth, Mayukh Deb and Samuel Weinbach, all at the Heidelberg, Germany–based AI company Aleph Alpha, to introduce an algorithm called AtMan earlier this year. AtMan allows large AI systems such as ChatGPT, Dall-E and Midjourney to finally explain their outputs.

In mid-April 2023 Aleph Alpha integrated AtMan into its own language model Luminous, allowing the AI to reason about its output. Those who want to try their hand at it can use the Luminous playground for free for tasks such as summarizing text or completing an input. For example, “I like to eat my burger with” is followed by the answer “fries and salad.” Then, thanks to AtMan, it is possible to determine which input words led to the output: in this case, “burger” and “like.”

AtMan’s explanatory power is limited to the input data, however. It can indeed explain that the words “burger” and “like” most strongly led Luminous to complete the input with “fries and salad.” But it cannot explain how Luminous knows that burgers are often eaten with fries and salad. This knowledge remains in the data with which the model was trained.

AtMan also cannot debunk all of the falsehoods (the so-called hallucinations) produced by AI systems, such as the claim that Florian Freistetter is my boss. Nevertheless, the ability to explain AI reasoning from input data offers enormous advantages. For example, it is possible to quickly check whether an AI-generated summary is correct and to make sure the AI has not added anything. Such an ability also plays an important role from an ethical perspective. “If a bank uses an algorithm to calculate a person’s creditworthiness, for example, it is possible to check which personal data led to the result: Did the AI use discriminatory characteristics such as skin color, gender, and so on?” says Deiseroth, who co-developed AtMan.

Moreover, AtMan is not limited to pure language models. It can also be used to examine the output of AI programs that generate or process images. This applies not only to programs such as Dall-E but also to algorithms that analyze medical scans in order to diagnose various disorders. Such a capability makes an AI-generated diagnosis more comprehensible. Physicians could even learn from the AI if it were to recognize patterns that have so far eluded humans.

AI Algorithms Are a “Black Box”

“AI systems are being developed extremely quickly and are sometimes integrated into products too early,” says Schramowski, who was also involved in the development of AtMan. “It’s important that we understand how an AI arrives at a conclusion so that we can improve it.” That’s because the algorithms are still a “black box”: although researchers understand how they generally function, it is often unclear why a specific output follows a particular input. Worse, if the same input is run through a model several times in a row, the output can vary. The reason for this lies in the way AI systems work.

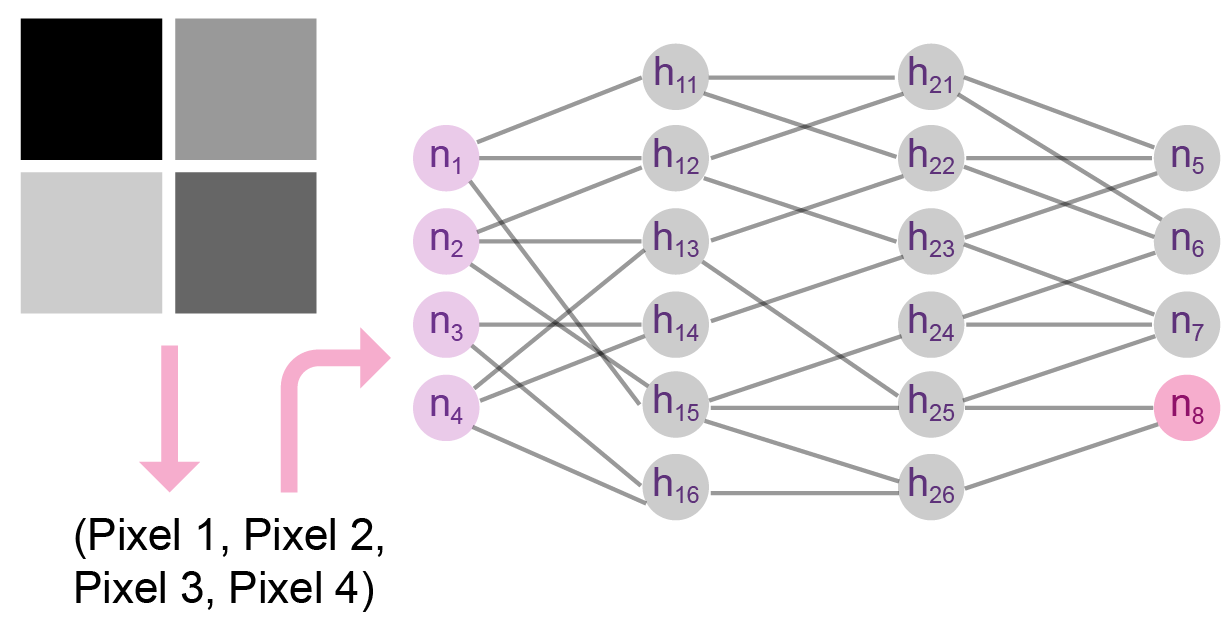

Modern AI systems, such as language models, machine translation programs and image-generating algorithms, are built from neural networks. The structure of these networks is based on the visual cortex of the brain, in which individual cells called neurons pass signals to one another via connections called synapses. In a neural network, computing units act as the “neurons,” and they are arranged in several layers, one after the other. As in the brain, the connections between these artificial neurons are called “synapses,” and each one is assigned a numerical value called its “weight.”

If, for example, a user wants to pass an image to such a program, the image is first converted into a list of numbers in which each pixel corresponds to an entry. The neurons of the first layer then take in these numerical values.

Next, the data pass through the neural network layer by layer: the value of a neuron in one layer is multiplied by the weight of the synapse and transferred to a neuron in the next layer. There, the result is added to the values arriving over all the other synapses that end at the same neuron. In this way, the program processes the original input layer by layer until the neurons of the last layer provide an output, for example, whether there is a cat, dog or seagull in the image.
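The layer-by-layer computation can be sketched in a few lines of Python. This is a minimal illustration, not how any production system is implemented: the weights, the four-pixel “image” and the three labels are made up, and the ReLU function between layers and the final softmax (which turns the last layer’s scores into probabilities) are common choices that the text does not mention.

```python
import math

# Hypothetical labels for the three output neurons.
LABELS = ["cat", "dog", "seagull"]

def layer_forward(inputs, weights):
    """One layer: each neuron in the next layer adds up input value * synapse weight.

    weights[j][i] is the weight of the synapse from neuron i (this layer)
    to neuron j (next layer).
    """
    return [sum(x * w for x, w in zip(inputs, row)) for row in weights]

def relu(values):
    """A common nonlinearity applied between layers (negative values become 0)."""
    return [max(0.0, v) for v in values]

def softmax(scores):
    """Convert the last layer's raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def forward(pixels, layers):
    """Pass the input through every layer in turn; the last layer gives the output."""
    values = pixels
    for i, weights in enumerate(layers):
        values = layer_forward(values, weights)
        if i < len(layers) - 1:
            values = relu(values)
    return softmax(values)

# Made-up weights: 4 input pixels -> 3 hidden neurons -> 3 output neurons.
LAYERS = [
    [[0.5, -0.2, 0.1, 0.0],
     [0.3, 0.8, -0.5, 0.2],
     [-0.4, 0.1, 0.9, 0.6]],
    [[1.0, -0.5, 0.2],
     [-0.3, 1.2, 0.1],
     [0.2, 0.1, 1.5]],
]

probs = forward([0.9, 0.1, 0.4, 0.7], LAYERS)
print(dict(zip(LABELS, (round(p, 3) for p in probs))))
```

Because these weights are arbitrary, the probabilities are meaningless until the network has been trained.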

But how do you make sure that a network processes the input data in a way that produces a meaningful result? For this, the weights (the numerical values of the synapses) must be calibrated correctly. If they are set appropriately, the program can describe a wide variety of images. You do not configure the weights yourself; instead you subject the AI to training so that it finds values that are as suitable as possible.

This works as follows: The neural network starts with a random selection of weights. Then the program is presented with tens of thousands or hundreds of thousands of sample images, all with corresponding labels such as “seagull,” “cat” and “dog.” The network processes the first image and produces an output that it compares with the given label. If the result differs from the label (which is most likely the case at the beginning), so-called backpropagation kicks in. This means the algorithm moves backward through the network, tracking which weights significantly influenced the result, and adjusts them. The algorithm repeats this combination of processing, checking and weight adjustment with all of the training data. If the training is successful, the algorithm is then able to correctly describe even previously unseen images.
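The process-compare-adjust loop can be illustrated with the smallest possible case: a single artificial neuron that decides “cat” (1) or “not cat” (0) from two hypothetical input features. With only one layer, backpropagation reduces to a single application of the chain rule; in a deep network the same error signal is passed backward layer by layer. The data, learning rate and number of passes are assumptions chosen for the sketch.

```python
import math
import random

def sigmoid(z):
    """Squash any number into the range (0, 1) so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, x):
    """Forward pass of one neuron: weighted sum of the inputs, then sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(data, epochs=200, lr=0.5, seed=0):
    """Gradient-descent training: process, compare with the label, adjust the weights."""
    rng = random.Random(seed)
    # Start from random weights, as described in the text.
    weights = [rng.uniform(-0.5, 0.5) for _ in data[0][0]]
    bias = 0.0
    for _ in range(epochs):
        for x, label in data:
            p = predict(weights, bias, x)   # process one example
            error = p - label               # compare with the label
            # For a sigmoid neuron with cross-entropy loss, the gradient for each
            # weight is error * input, so weights whose inputs contributed more
            # to the mistake are changed more.
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

# Hypothetical labeled examples: (features, 1 = "cat", 0 = "not cat").
DATA = [
    ([1.0, 0.0], 1), ([0.9, 0.2], 1), ([0.8, 0.1], 1),
    ([0.0, 1.0], 0), ([0.2, 0.9], 0), ([0.1, 0.7], 0),
]

weights, bias = train(DATA)
print([round(predict(weights, bias, x), 2) for x, _ in DATA])
```

Real image classifiers differ mainly in scale: many layers, millions or billions of weights, and labeled images instead of two hand-made numbers per example.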

Two Methods for Understanding AI Results

Often, however, it is not just the AI’s answer that is interesting but also what information led it to its judgment. In the medical field, for example, one would like to know why a program believes it has detected signs of a disease in a scan. To find out, one could of course look inside the trained model itself, because its parameters contain all the information. But modern neural networks have hundreds of billions of parameters, so it is impossible to keep track of all of them.

Nevertheless, there are ways to make an AI’s results more transparent, and several different approaches exist. One is backpropagation. As in the training process, one traces back how the output was generated from the input data. To do this, one follows the “synapses” in the network with the highest weights backward and can thus infer which parts of the original input most influenced the result.
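A common concrete form of this idea computes gradients: how strongly the output would change if each input value were nudged. The sketch below assumes a tiny two-layer network with hand-picked weights, performs the backward pass by hand with the chain rule, and uses the size of each gradient as a relevance score for that input. It is a generic gradient-based “saliency” method offered for illustration, not a description of any specific XAI tool.

```python
import math

def forward(x, W, v):
    """Two-layer network: hidden = tanh(W x), output = v . hidden."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in W]
    output = sum(vj * hj for vj, hj in zip(v, hidden))
    return output, hidden

def input_gradient(x, W, v):
    """Backward pass from the output to each input via the chain rule.

    d output / d x_i = sum_j v_j * (1 - hidden_j**2) * W[j][i]
    (the derivative of tanh(h) is 1 - tanh(h)**2).
    """
    _, hidden = forward(x, W, v)
    # Error signal arriving at each hidden neuron.
    delta = [vj * (1.0 - hj * hj) for vj, hj in zip(v, hidden)]
    # Pass it back along every synapse to the inputs.
    return [sum(delta[j] * W[j][i] for j in range(len(W))) for i in range(len(x))]

def saliency(x, W, v):
    """Relevance of each input value = size of its gradient."""
    return [abs(g) for g in input_gradient(x, W, v)]

# Hypothetical weights and input.
W = [[0.5, -0.3, 0.8],
     [-0.6, 0.2, 0.4]]
V = [1.0, -0.7]
X = [0.2, 0.9, -0.4]

print(saliency(X, W, V))
```

Note that the backward pass reuses the hidden activations computed in the forward pass; in a very large model, all of those intermediate values have to be kept in memory. A quick finite-difference check (nudging one input slightly and re-running `forward`) should agree with `input_gradient`.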

Another method is to use a perturbation model, in which testers change the input data slightly and observe how this changes the AI’s output. This makes it possible to learn which input data influenced the result most.

These two XAI methods have been widely used. But they fail with large AI models such as ChatGPT, Dall-E or Luminous, which have several billion parameters. Backpropagation, for example, lacks the necessary memory: if the XAI traverses the network backward, it has to keep a record of the many billions of parameters. While training an AI in a huge data center, this is possible, but the same procedure cannot be repeated constantly to examine individual inputs.

In the perturbation model, the limiting factor is not memory but computing power. If one wants to know, for example, which area of an image was decisive for an AI’s response, one would have to vary each pixel individually and generate a new output each time. This takes a lot of time, as well as computing power that is not available in practice.
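The perturbation approach treats the model as a black box: change one part of the input, run the model again, and record how much the output moves. The sketch below uses “occlusion” (setting one pixel at a time to a baseline value of 0) on a hypothetical 16-pixel image with a made-up scoring function, and it counts the model calls to show where the cost comes from.

```python
def occlusion_relevance(model, pixels, baseline=0.0):
    """Perturbation-based attribution.

    Replaces one pixel at a time with `baseline`, re-runs the model and records
    how much the output drops. Returns (relevance per pixel, number of model calls).
    """
    original = model(pixels)
    calls = 1
    relevance = []
    for i in range(len(pixels)):
        perturbed = list(pixels)
        perturbed[i] = baseline
        relevance.append(original - model(perturbed))
        calls += 1
    return relevance, calls

def toy_model(pixels):
    """Hypothetical 'cat score' that depends only on pixels 0 and 5."""
    return 2.0 * pixels[0] + 0.5 * pixels[5]

pixels = [1.0] * 16  # a flattened 4 x 4 image
relevance, calls = occlusion_relevance(toy_model, pixels)
print(relevance[0], relevance[5], calls)  # 2.0 0.5 17
```

The number of model runs grows with the number of pixels: explaining a single one-megapixel image this way would take 1,000,001 full runs, and each run of a large model is itself expensive.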

To develop AtMan, Kersting’s team successfully adapted the perturbation model for large AI systems so that the required computing power remained manageable. Unlike conventional algorithms, AtMan does not vary the input values directly but modifies data that are already a few layers deeper in the network. This saves considerable computing steps.

An Explainable AI for Transformer Models

To understand how this works, you need to know how AI models such as ChatGPT function. They are a special type of neural network called transformer networks. Transformers were originally developed to process natural language, but they are now also used in image generation and recognition.

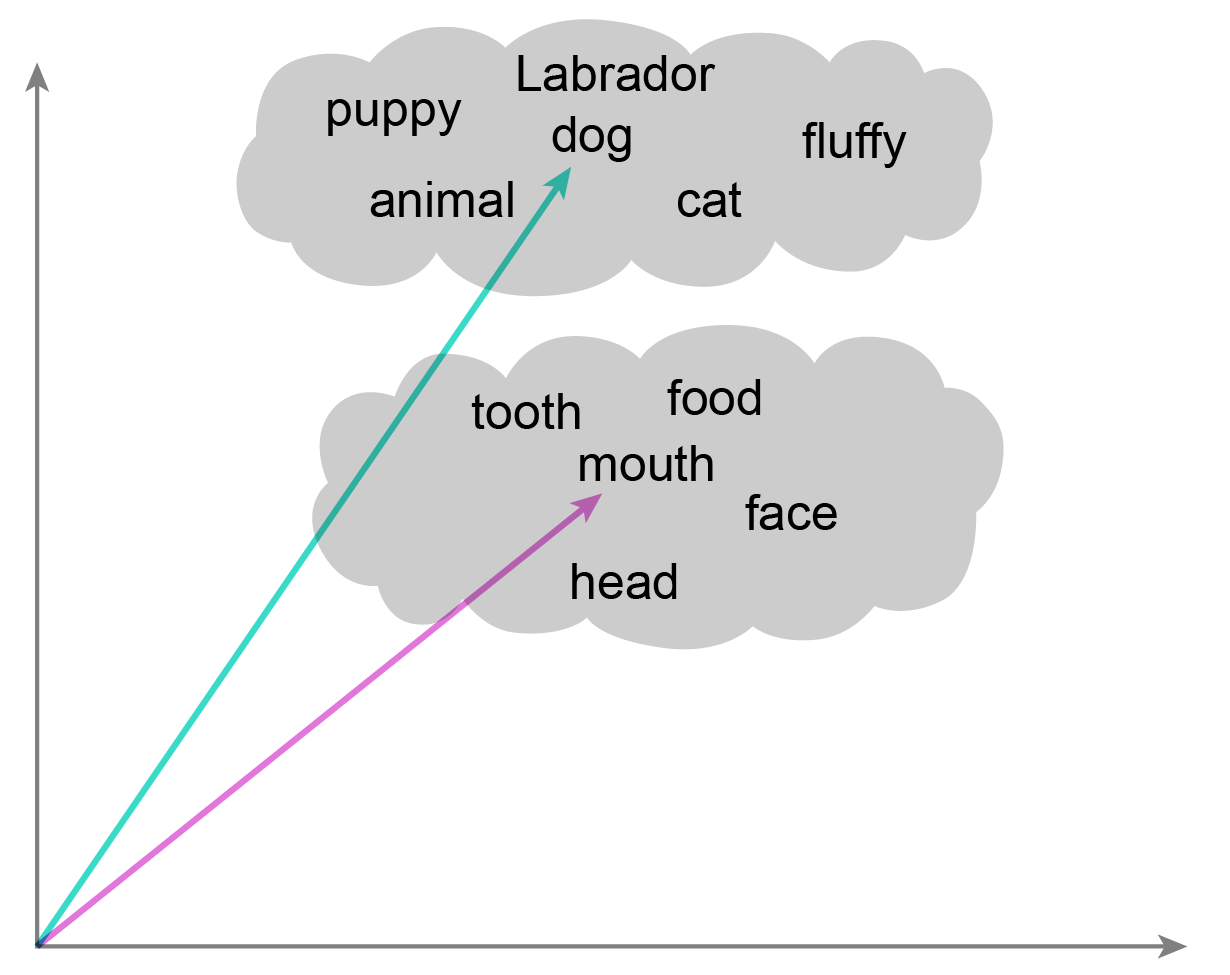

The most difficult task in processing language is converting words into suitable mathematical representations. For images, this step is simple: convert them into a long list of pixel values. If the entries of two lists are close to each other, they also correspond to visually similar images. A similar procedure must be found for words: semantically similar words such as “house” and “cottage” should have a similar representation, while similarly spelled words with different meanings, such as “house” and “mouse,” should be farther apart in their mathematical form.
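One standard way to measure whether two such lists of numbers (vectors) are “close” is cosine similarity, which compares their directions. The three-dimensional word vectors below are invented for illustration; real language models learn vectors with hundreds or thousands of dimensions from text.

```python
import math

def cosine_similarity(a, b):
    """1.0 = same direction, 0.0 = perpendicular."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Invented 3-dimensional vectors, for illustration only.
EMBEDDINGS = {
    "house":   [0.9, 0.8, 0.1],
    "cottage": [0.85, 0.75, 0.2],
    "mouse":   [0.1, 0.2, 0.95],
}

print(round(cosine_similarity(EMBEDDINGS["house"], EMBEDDINGS["cottage"]), 3))  # 0.996
print(round(cosine_similarity(EMBEDDINGS["house"], EMBEDDINGS["mouse"]), 3))    # 0.293
```

Spelling plays no role here: “house” and “mouse” differ by a single letter, yet their vectors point in very different directions.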

Transformers can master this demanding task: they convert words into a particularly suitable mathematical representation. This requires a lot of work, however. Developers have to feed the network a large number of texts so that it learns which words appear in similar contexts and are therefore semantically related.

It’s All about Attention

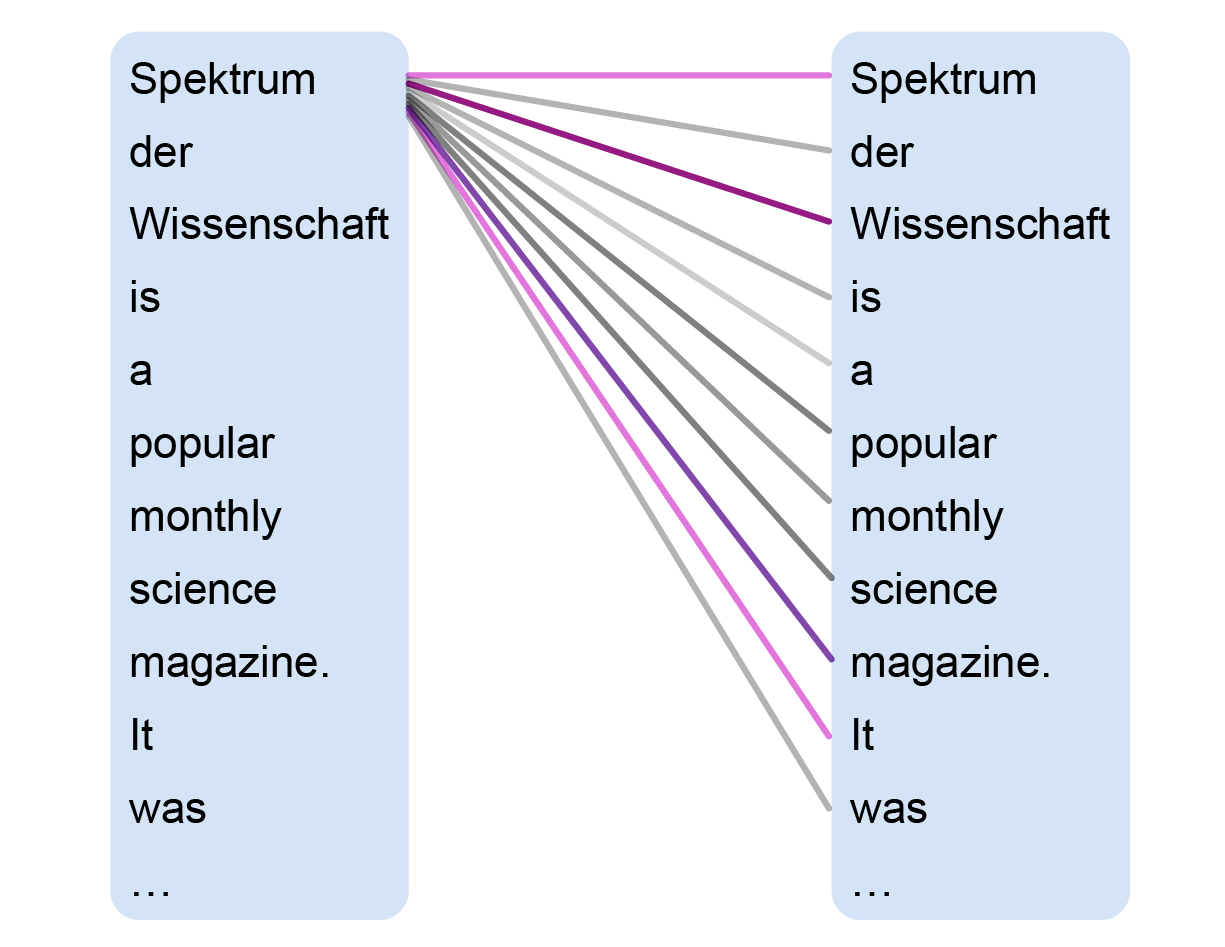

But that alone is not enough. You also have to make sure that the AI understands a longer input after training. Take, for example, the first lines of the German-language Wikipedia entry on Spektrum der Wissenschaft. They translate roughly to “Spektrum der Wissenschaft is a popular monthly science magazine. It was founded in 1978 as a German-language edition of Scientific American, which has been published in the U.S. since 1845, but over time has taken on an increasingly independent character from the U.S. original.” How does the language model know what “U.S.” and “original” refer to in the second sentence? In the past, most neural networks failed at such tasks, at least until 2017, when experts at Google Brain introduced a new type of network architecture based entirely on the so-called attention mechanism, the core of transformer networks.

Attention enables AI models to recognize the most important information in an input: Which words are related? What content is most relevant to the output? As a result, an AI model is able to recognize references between words that are far apart in the text. To do this, attention takes each word in a sentence and relates it to every other word. So for the sentence in the Wikipedia example, the model starts with “Spektrum” and compares it with all the other words in the entry, such as “is,” “science,” and so on. This process yields a new mathematical representation of the input words, one that takes the content of the sentence into account. This attention step occurs both during training and in operation when users type something.
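The step of comparing every word with every other word is usually implemented as scaled dot-product attention. The following simplified sketch assumes each word already has a vector (the four-dimensional vectors here are made up) and, unlike real transformers, skips the learned query, key and value projection matrices, so each word’s vector plays all three roles.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Simplified scaled dot-product self-attention (no learned projections)."""
    d = len(vectors[0])
    all_weights, outputs = [], []
    for query in vectors:
        # Compare this word with every word (including itself).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        # New representation: attention-weighted average of all word vectors.
        outputs.append([sum(w * vec[t] for w, vec in zip(weights, vectors)) for t in range(d)])
    return all_weights, outputs

# Hypothetical word vectors.
WORDS = ["Spektrum", "is", "science", "magazine"]
VECTORS = [
    [0.9, 0.1, 0.7, 0.3],
    [0.1, 0.8, 0.0, 0.1],
    [0.6, 0.0, 0.9, 0.2],
    [0.8, 0.1, 0.5, 0.6],
]

weights, outputs = self_attention(VECTORS)
# How much attention "Spektrum" pays to each word:
print({w: round(a, 2) for w, a in zip(WORDS, weights[0])})
```

Each row of `weights` is one word’s attention distribution over the whole input, and each entry of `outputs` is that word’s new, context-aware representation.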

This is how language models such as ChatGPT or Luminous are able to process an input and generate a response from it. By determining which content to pay attention to, the program can calculate which words are most likely to follow the input.

Shifting Attention in a Targeted Way

This attention mechanism can be used to make language models more transparent. AtMan, named after the idea of “attention manipulation,” specifically manipulates how much attention an AI pays to certain input words. It can direct attention toward certain content and away from other content. This makes it possible to see which parts of the input were crucial for the output, without consuming too much computing power.
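The following toy sketch illustrates the general idea: switch off the attention paid to one input word at a time and measure how much an output score drops. It is not AtMan’s published implementation, which operates inside a full multilayer transformer; here everything is collapsed into a single attention step. Scaling the post-softmax weights and renormalizing them is a simplifying assumption, and the word vectors, the query and the “readout” vector standing in for the answer “fries and salad” are all hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys, target=None, factor=1.0):
    """Attention of one query over all tokens, with token `target` optionally scaled."""
    d = len(query)
    w = softmax([sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys])
    if target is not None:
        w[target] *= factor          # 0.0 switches the token off
        total = sum(w)
        w = [x / total for x in w]   # renormalize so the weights sum to 1
    return w

def output_score(query, tokens, readout, target=None, factor=1.0):
    """Hypothetical score for the generated answer: readout . attention-weighted context."""
    w = attention_weights(query, tokens, target, factor)
    context = [sum(wj * tok[t] for wj, tok in zip(w, tokens)) for t in range(len(readout))]
    return sum(r * c for r, c in zip(readout, context))

def relevance_by_suppression(query, tokens, readout):
    """Suppress attention to each token in turn; relevance = how much the score drops."""
    base = output_score(query, tokens, readout)
    return [base - output_score(query, tokens, readout, target=i, factor=0.0)
            for i in range(len(tokens))]

# Hypothetical vectors for the input words and a readout for "fries and salad".
WORDS = ["I", "like", "burger"]
TOKENS = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
QUERY = [1.0, 1.0, 1.0]
READOUT = [0.0, 0.6, 1.0]

scores = relevance_by_suppression(QUERY, TOKENS, READOUT)
print({w: round(s, 3) for w, s in zip(WORDS, scores)})
```

In this toy, “burger” gets the highest relevance and “like” the second highest. A negative value means that suppressing the word actually raised the score, because its share of attention went to more relevant words. Unlike pixel-by-pixel perturbation, only the attention weights are changed; the input itself stays untouched.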

For example, researchers can pass the following text to a language model: “Hello, my name is Lucas. I like soccer and math. I have been working on … for the past few years.” The model initially completed this sentence by filling in the blank with “my degree in computer science.” When the researchers told the model to increase its attention to “soccer,” the output changed to “the soccer field.” When they increased attention to “math,” they got “math and science.”
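Amplifying attention instead of suppressing it can be sketched the same way. In the toy below, the attention weight that a query assigns to one chosen word is multiplied by a factor, the weights are renormalized, and the resulting context vector is compared with three candidate completions. All vectors are hand-picked so that the toy mirrors the qualitative behavior in the Lucas example; they have nothing to do with Luminous, and scaling the post-softmax weights is a simplifying assumption, not necessarily how AtMan manipulates attention.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys, target=None, factor=1.0):
    """Attention of one query over all tokens; optionally scale one token's weight."""
    d = len(query)
    w = softmax([sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys])
    if target is not None:
        w[target] *= factor          # >1 amplifies, 0 switches the token off
        total = sum(w)
        w = [x / total for x in w]   # renormalize so the weights sum to 1
    return w

# Hypothetical token vectors; dimensions loosely mean (general/CS, soccer, math).
TOKENS = ["Lucas", "soccer", "math"]
KEYS = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
QUERY = [0.5, 0.2, 0.2]  # hypothetical query at the position of the blank
CANDIDATES = {
    "computer science": [1.0, 0.0, 0.4],
    "soccer field": [0.0, 1.0, 0.0],
    "math and science": [0.3, 0.0, 1.0],
}

def complete(target=None, factor=1.0):
    """Pick the candidate whose vector best matches the attention-weighted context."""
    w = attention_weights(QUERY, KEYS, target, factor)
    context = [sum(wj * key[t] for wj, key in zip(w, KEYS)) for t in range(len(QUERY))]
    return max(CANDIDATES, key=lambda c: sum(a * b for a, b in zip(CANDIDATES[c], context)))

print(complete())                                    # computer science
print(complete(TOKENS.index("soccer"), factor=4.0))  # soccer field
print(complete(TOKENS.index("math"), factor=4.0))    # math and science
```

Calling `attention_weights` with `factor=0.0` removes a token’s influence entirely, the same kind of switch that could be used to keep a model from relying on a particular piece of input.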

AtMan therefore represents an important advance in the field of XAI and can bring us closer to understanding AI systems. But it still does not save language models from wild hallucinations, and it cannot explain why ChatGPT believes that Florian Freistetter is editor in chief of Spektrum der Wissenschaft.

It can at least be used to control what information the AI does and does not take into account, however. “This is important, for example, in algorithms that assess a person’s creditworthiness,” Schramowski explains. “If a program bases its results on sensitive data such as a person’s skin color, gender or origin, you can specifically turn off the focus on that.” AtMan can also raise questions if it reveals that an AI program’s output is barely influenced by the content passed to it. In that case, the AI has apparently drawn all of its generated content from the training data. “You should then check the results thoroughly,” Schramowski says.

AtMan can process not only text data in this way but any kind of data that a transformer model works with. For example, the algorithm can be combined with an AI that provides descriptions of images. This can be used to find out which areas of an image led to the description provided. In their publication, the researchers looked at a photograph of a panda and found that the AI based its description “panda” mainly on the animal’s face.

“And it seems like AtMan can do even more,” says Deiseroth, who also helped develop the algorithm. “You could use the explanations from AtMan specifically to improve AI models.” Previous work has already shown that smaller AI systems produce better results when trained to provide good reasoning. Whether the same is true for AtMan and large transformer models remains to be investigated. “But we still need to check that,” Deiseroth says.

This article originally appeared in Spektrum der Wissenschaft and was reproduced with permission.
