
In the heart of Kyoto, Japan, sits the more than 400-year-old Kodai-ji Temple, graced with ornate cherry blossoms, traditional maki-e art and, now, a robot priest made from aluminum and silicone.

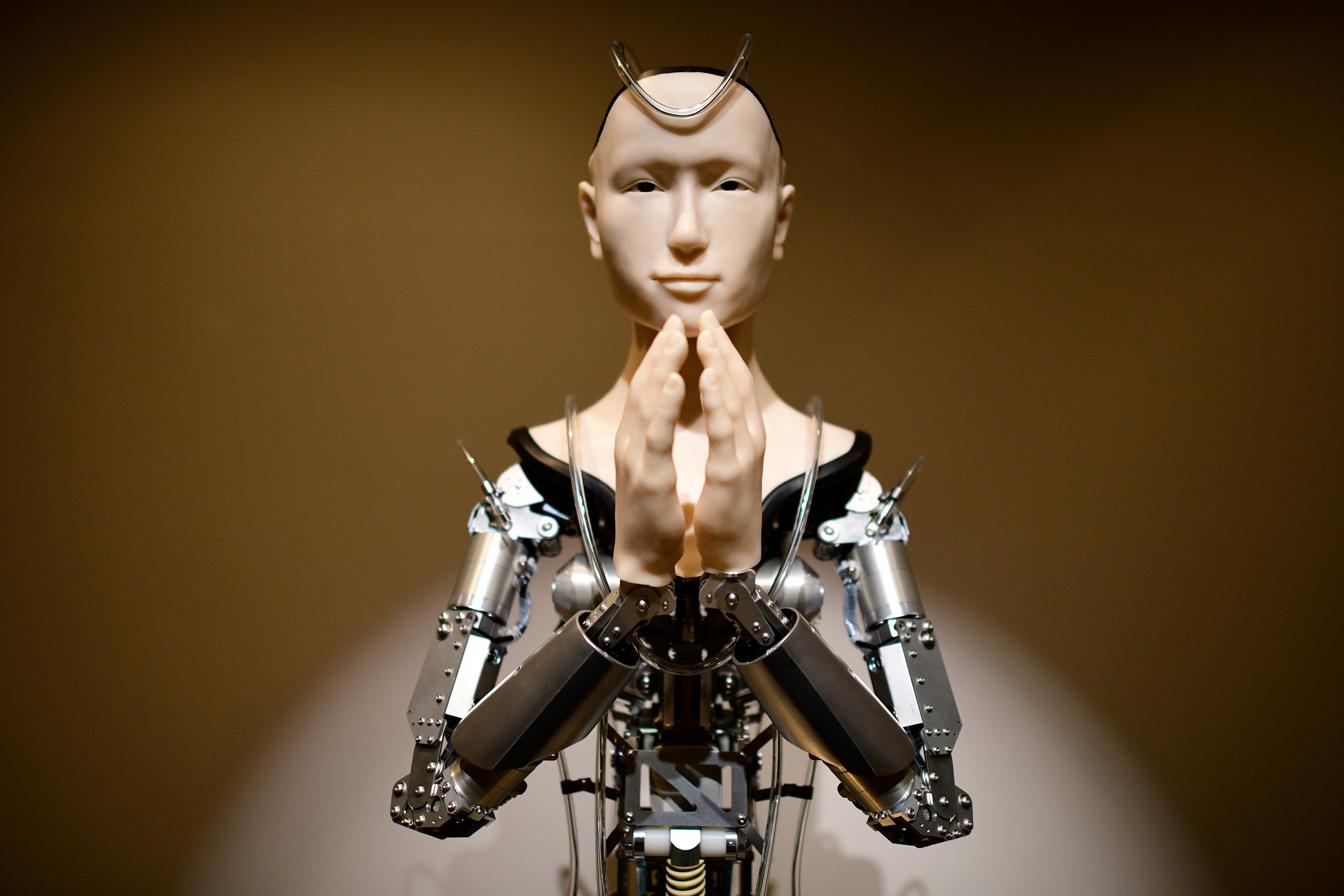

“Mindar,” a robot priest designed to resemble the Buddhist goddess of mercy, is part of a growing robot workforce that is exacerbating job insecurity across industries. Robots have even infiltrated fields that once seemed immune to automation, such as journalism and psychotherapy. Now people are debating whether robots and artificial intelligence programs can replace priests and monks. Would it be naive to think these occupations are safe? Is there anything robots cannot do?

We think that some jobs will never succumb to robot overlords. Decades of studying the psychology of automation have shown us that these machines still lack one quality: credibility. And without it, Mindar and other robot priests will never outperform humans. Engineers rarely think about credibility when they design robots, but it could determine which jobs cannot be successfully automated. The implications extend far beyond religion.

What is credibility, and why is it so important? Credibility is a counterpart to capability. Capability describes whether you can do something, whereas credibility describes whether people trust you to do something. Credibility is one’s reputation as an authentic source of information.

Scientific studies show that earning credibility requires behaving in a way that would be hugely costly or irrational if you did not truly hold your beliefs. When Greta Thunberg traveled to the 2019 United Nations Climate Action Summit by boat, she signaled an authentic belief that people need to act immediately to curb climate change. Religious leaders display credibility through pilgrimage and celibacy, practices that would not make sense if they did not authentically hold their beliefs. When religious leaders lose credibility, as in the wake of the Catholic Church’s sexual abuse scandals, religious institutions lose money and followers.

Robots are highly capable, but they may not be credible. Studies show that credibility requires authentic beliefs and sacrifice on behalf of those beliefs. Robots can preach sermons and write political speeches, but they do not authentically understand the beliefs they convey. Nor can robots truly engage in costly behavior such as celibacy, because they do not feel the cost.

Over the past two years we have partnered with places of worship, including Kodai-ji Temple, to test whether this lack of credibility would hurt religious institutions that employ robot priests. Our hypothesis was not a foregone conclusion. Mindar and other robot priests are spectacles, with wall-to-wall video screens and immersive sound effects. Mindar swivels throughout its sermons to make eye contact with its audience while its hands are clasped together in prayer. Visitors have flocked to experience these sermons. Even with these special effects, however, we doubted whether robot priests could truly inspire people to feel committed to their faith and their religious institutions.

We conducted three related studies, which are described in a recently published paper. In our first study, we recruited people as they left Kodai-ji. Some had seen Mindar, and some had not. We asked people about the credibility of both Mindar and the human monks who work at the temple, and then gave them an opportunity to donate to the temple. We found that people rated Mindar as less credible than the human monks who work at Kodai-ji. We also found that people who saw the robot were 12 percentage points less likely to donate money to the temple (68 percent) than those who had visited the temple but did not observe Mindar (80 percent).

We then replicated this finding twice in our paper. In a follow-up study, we randomly assigned Taoists to watch either a human or a robot deliver a passage from the Tao Te Ching. In a third study, we measured Christians’ subjective religious commitment after they read a sermon that we told them was composed by either a human or a chatbot. In both studies, people rated robots as less credible than humans, and they expressed less commitment to their religious identity after a robot-delivered sermon, compared with a human-delivered one. Participants who saw the robot deliver the Tao Te Ching sermon were also 12 percentage points less likely to circulate a flyer promoting the temple (18 percent) than those who watched the human priest (30 percent).

We think that these studies have implications beyond religion and foreshadow the limits of automation. Current conversations about the future of automation have focused on capabilities. The website Will Robots Take My Job? uses data on robot capabilities to estimate the likelihood that any job will be automated soon. In a recent editorial, directors at Singapore Management University argued that the best path to employment in the age of AI was to cultivate “distinctively human capabilities.”

Focusing solely on capability, however, may be blinding us to areas where robots are underperforming because they are not credible.

This robot credibility penalty is currently being felt in domains ranging from journalism to health care, law, the military and self-driving cars. Robots can capably perform tasks in these domains. But they fail to inspire trust, which is essential. People are less likely to believe news headlines written by AI, and they do not want machines making moral decisions about who lives and who dies in drone strikes or car collisions.

Education may also suffer from the credibility penalty. Khan Academy, which publishes online tools for student education, recently launched a generative AI, called Khanmigo, that offers developmental feedback rather than direct answers to students. But will these programs work?

Just as the pious need a leader who has sacrificed for their beliefs, students need role models who authentically care about what they teach. They need capable and credible teachers. Automating education could therefore further widen educational inequality. While students from wealthy backgrounds will have access to human teachers with AI assistance, those from poorer backgrounds may end up in classrooms taught solely by AI.

Politics and social activism are other places where credibility matters. Imagine robots trying to deliver either Abraham Lincoln’s Gettysburg Address or Martin Luther King’s “I Have a Dream” speech. These speeches were so powerful because they were imbued with their authors’ authentic pain and love.

Jobs requiring credibility will run far more effectively if they find a way to complement AI capabilities with human credibility rather than replace human workers. People may not trust robot journalists and teachers, but they will trust humans in these roles who use AI for support.

Accurately forecasting the future of work requires recognizing the occupations that need a human touch and protecting human workers in those areas. In the rush to advance AI technology, we must remember that there are some things robots cannot do.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.
