
Inside the orb, the entire world shrinks to a sphere of white light and flashes. Outside the orb’s metal, skeletal frame is darkness. Imagine you are strapped into a chair inside this contraption. A voice from the darkness suggests expressions: ways to pose your mouth and eyebrows, scenarios to react to, phrases to say and emotions to embody. At irregular intervals, the voice also tells you not to worry and warns that more flashes are coming soon.

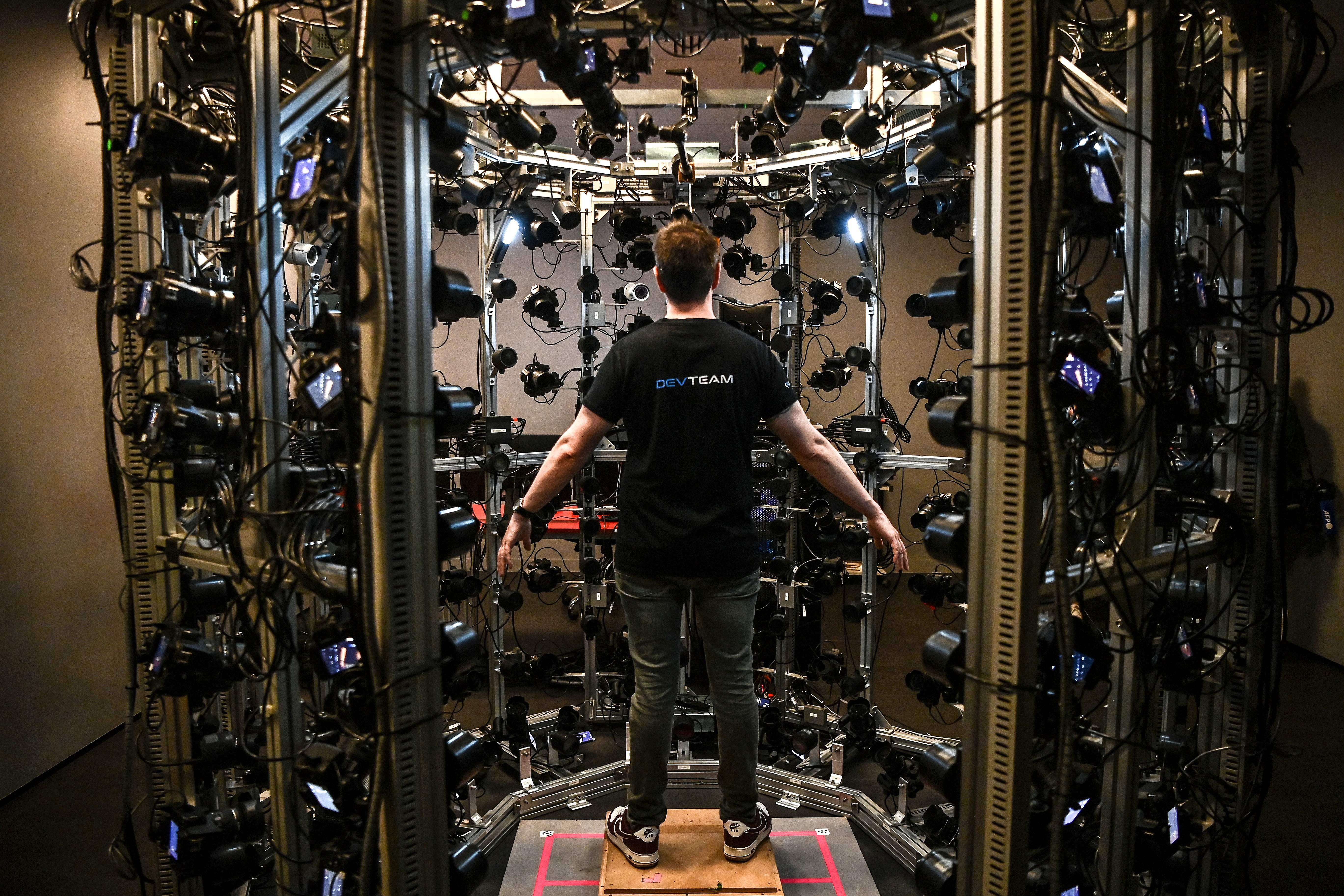

“I don’t think I was freaked out, but it was a pretty frustrating space,” says an actor who asked Scientific American to withhold his name for privacy reasons. He’s describing his experience with “the orb,” his term for the photogrammetry booth used to capture his likeness during the production of a major video game in 2022. “It felt like being in [a magnetic resonance imaging machine],” he says. “It was really pretty sci-fi.” This actor’s experience was part of the scanning process that lets media production studios take photographs of cast members in many positions and create movable, malleable digital avatars. Those avatars can later be animated to perform practically any action or movement in a realistic video sequence.

Advances in artificial intelligence are now making it steadily easier to create digital doubles like this, even without an intense session in the orb. Some actors fear a possible future in which studios will pressure them to sign away their likeness and their digital double will take work away from them. This is one of the factors motivating members of the union SAG-AFTRA (the Screen Actors Guild–American Federation of Television and Radio Artists) to go on strike. “Performers need the protection of our images and performances to prevent replacement of human performances by artificial intelligence technology,” the union said in a statement released a few days after the strike was announced in mid-July.

While AI replacement is an unsettling possibility, the digital doubles seen in today’s media productions still rely on human performers and special effects artists. Here’s how the technology works, and how AI is shaking up the established process.

How Digital Double Tech Works

Over the past 25 years or so, it has become increasingly common for big-budget media productions to create digital doubles of at least some performers’ faces and bodies. This technology almost certainly plays a role in any movie, TV show or video game that involves extensive digital effects, elaborate action scenes or an actor’s portrayal of a character at several ages. “It’s become kind of industry standard,” says Chris MacLean, visual effects supervisor for the Apple TV show Foundation.*

The photogrammetry booth is an area surrounded by hundreds of cameras, sometimes arranged in an orb shape and sometimes around a square room. The cameras capture thousands of deliberately overlapping two-dimensional images of a person’s face at high resolution. If an actor’s role requires speaking or showing emotion, images of many different facial movements are needed. For that reason, starring performers require more extensive scans than secondary or background cast members. Similarly, larger setups are used to scan bodies.

With those data, visual effects (VFX) artists take the model from two dimensions to three. The overlap of the images is crucial. Based on the camera coordinates and those redundant overlapping sections, the images are mapped and folded in relation to one another in a process akin to digital origami. Artists can then rig the resulting 3-D digital double to a virtual “skeleton” and animate it, either by directly following an actor’s real-world, motion-captured performance or by combining that performance with a computer-generated series of movements. The animated figure can then be placed in a digital landscape and given dialogue. Technically, it is possible to use a person’s scans to generate photorealistic video footage of them doing and saying things that actor never did or said.
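The article does not describe any studio’s software, but the geometric idea behind “folding” overlapping photos into 3-D can be sketched. The minimal example below assumes two already-calibrated cameras with known projection matrices and a single facial feature already matched in both photos; real photogrammetry pipelines automate feature matching across hundreds of cameras and refine the result (for example, with bundle adjustment), none of which is shown here. It uses linear (DLT) triangulation with NumPy, and the camera matrices and the point are made-up values chosen for illustration.

```python
import numpy as np


def project(P, X):
    """Project a 3-D point X through a 3x4 camera matrix P to image coordinates (u, v)."""
    x = P @ np.append(X, 1.0)  # homogeneous image coordinates
    return x[:2] / x[2]


def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3-D point seen in two overlapping photos.

    P1, P2: 3x4 projection matrices, assumed known from camera calibration.
    x1, x2: (u, v) coordinates of the same matched feature in each photo.
    """
    # Each view contributes two linear constraints on the homogeneous point X.
    A = np.array([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # Least-squares solution of A @ X = 0 (with |X| = 1): the right singular
    # vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]  # back from homogeneous to ordinary 3-D coordinates


# Hypothetical rig: two identical cameras, the second one unit to the right.
P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

point = np.array([0.5, 0.2, 4.0])  # a hypothetical spot on the subject's face
x1, x2 = project(P1, point), project(P2, point)
print(triangulate(P1, P2, x1, x2))  # approximately (0.5, 0.2, 4.0)
```

Because each camera sees the feature from a different position, the two viewing rays meet at a single 3-D location. With many overlapping views, the same least-squares system simply gains more rows, which is one reason redundant overlap helps.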

Special effects artists can also apply an actor’s digital performance to a virtual avatar that looks completely different from the human being. For example, the aforementioned video game actor says he made faces in the orb and recorded his lines in a recording booth. He also physically acted out many scenes in a separate studio with his fellow performers for motion capture, a process similar to photogrammetry but designed to record the body’s movements. When players engage with the final product, however, they won’t see this actor on-screen. Instead his digital double was modified to look like a villain with a specific appearance. The final animated character thus manifested both the actor’s work and the video game character’s traits.

Film and television productions have used this process for years, although it has historically been both labor-intensive and expensive. Despite the challenges, digital doubles are common. Production teams often use them to make small changes that involve dialogue and action. The tech is also used for bigger edits, such as taking a group of 100 background actors and morphing and duplicating them into a digital crowd of thousands. But it’s easier to achieve such feats convincingly if the original footage is close to the desired final output. For example, a background actor scanned wearing a costume meant to replicate clothing worn in 19th-century Europe would be hard to edit into a dystopian future in which their digital double wears a space suit, MacLean says. “I don’t think there’s any way that the studios would have that much patience,” he adds.

Yet generative artificial intelligence, the same type of machine-learning technology behind ChatGPT, is starting to make parts of the digital double process faster and easier.

AI Swoops In

Some VFX companies are already using generative AI to speed up the process of modifying a digital double’s appearance, MacLean notes. This makes it easier to “de-age” a famous actor in films such as Indiana Jones and the Dial of Destiny, which includes a flashback with a younger-looking version of now 81-year-old Harrison Ford. AI also comes in handy for face replacement, in which an actor’s likeness is superimposed over a stunt double (essentially a sanctioned deepfake), according to Vladimir Galat, chief technology officer of the Scan Truck, a mobile photogrammetry company.

Galat says advances in AI have made some photogrammetry scans unnecessary: A generative model can be trained on existing photos and footage, even of someone no longer living. Digital Domain, a VFX production company that worked on Avengers: Endgame, says it is also possible to create fake digital performances by historical figures. “This is a very new technology but a growing part of our business,” says Hanno Basse, Digital Domain’s chief technology officer.

So far, living people have still been involved in crafting performances “by” the deceased. A real-world actor performs a scene, and then effects artists replace their face with that of the historical person. “We feel the nuances of an actor’s performance, in combination with our AI and machine learning tool sets, is critical to achieving photorealistic results that can captivate an audience and cross the uncanny valley,” Basse says, referring to the eerie feeling sometimes triggered by something that looks almost, but not quite, human.

Fears of Robot Replacement

There’s a big difference between modifying a digital double and replacing a person’s performance entirely with AI, says computer engineer Jeong Joon “JJ” Park, who currently researches computer vision and graphics at Stanford University and will be starting a position at the University of Michigan this fall. The uncanny valley is wide, and there is not yet a generative AI model that can produce a complete, photorealistic, moving scene from scratch; that technology is not even close, Park notes. To get there, “there needs to be a major leap in the intelligence that we’re building,” he says. (AI-generated images may be hard to tell from the real thing, but creating realistic still images is much easier than producing video meant to depict 3-D space.)

Still, the threat of abuse of actors’ likenesses looms. If one person’s face can be easily swapped over another’s, then what’s to stop filmmakers from putting Tom Cruise in every shot of every action movie? What will prevent studios from replacing 100 background actors with just one and using AI to create the illusion of many? A patchwork of state laws means that, in most places, people have legal ownership of their own likeness, says Eleanor Lackman, a copyright and trademark attorney. But she notes that there are broad exceptions for artistic and expressive use, and filmmaking could easily fall under that designation. And regardless of the law, a person could legally sign a contract handing their own likeness rights over to a production company, explains Jonathan Blavin, a lawyer specializing in media and tech. When it comes to protecting one’s digital likeness, it all comes down to the details of the contract, a situation SAG-AFTRA is well aware of.

The actor who played the video game villain felt comfortable being scanned for his role last year. “The company I worked with was pretty aboveboard,” he says. But in the future, he may not be so quick to enter agreements. “The capabilities of what AI can do with face capture, and what we saw from the [prestrike negotiations], is scary,” he says. The actor loves video games; he was excited to act in one, and he hopes to do so again. But first, he says, “I would double-check the paperwork, check in with my agency, and possibly a lawyer.”

*Editor’s Note (7/25/23): This sentence was edited after publication to clarify Chris MacLean’s position on the Apple TV show Foundation.
