Scientists hope brain implants will one day help people who have lost the ability to speak to get their voice back, and maybe even to sing. Now, for the first time, researchers have demonstrated that the brain’s electrical activity can be decoded and used to reconstruct music.

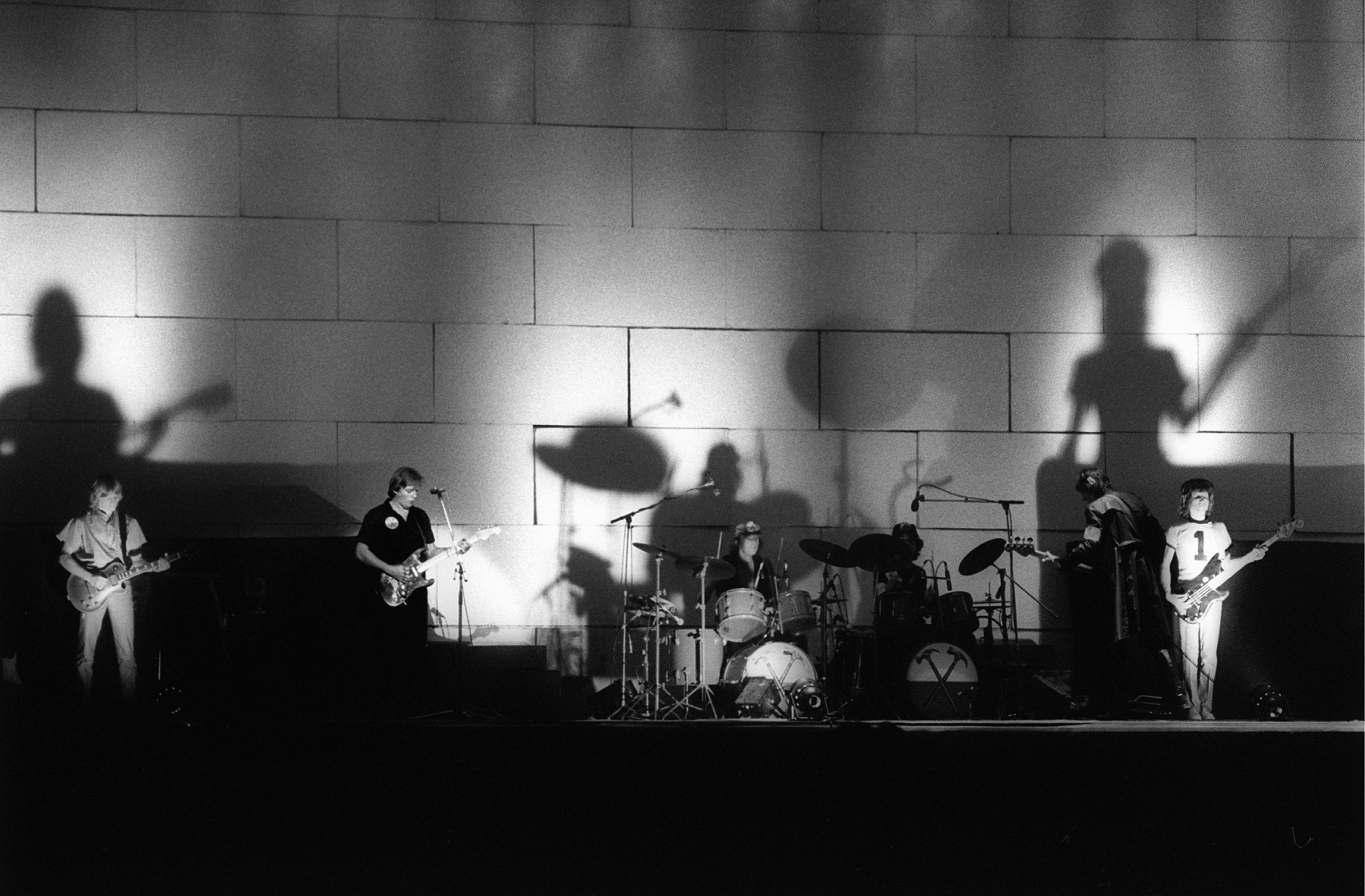

A new study analyzed data from 29 people who were already being monitored for epileptic seizures using postage-stamp-size arrays of electrodes that were placed directly on the surface of their brain. As the participants listened to Pink Floyd’s 1979 song “Another Brick in the Wall, Part 1,” the electrodes captured the electrical activity of several brain regions attuned to musical elements such as tone, rhythm, harmony and lyrics. Using machine learning, the researchers reconstructed garbled but distinctive audio of what the participants were hearing. The study results were published on Tuesday in PLOS Biology.

Neuroscientists have worked for decades to decode what people are seeing, hearing or thinking from brain activity alone. In 2012 a team that included the new study’s senior author (cognitive neuroscientist Robert Knight of the University of California, Berkeley) became the first to successfully reconstruct audio recordings of words that participants heard while wearing implanted electrodes. Others have since used similar techniques to reproduce recently viewed or imagined pictures from participants’ brain scans, including human faces and landscape photographs. But the recent PLOS Biology paper by Knight and his colleagues is the first to suggest that scientists can eavesdrop on the brain to synthesize music.

“These exciting findings build on previous work to reconstruct plain speech from brain activity,” says Shailee Jain, a neuroscientist at the University of California, San Francisco, who was not involved in the new study. “Now we’re able to really dig into the brain to unearth the sustenance of sound.”

To turn brain activity data into musical sound in the study, the researchers trained an artificial intelligence model to decipher data captured from thousands of electrodes that were attached to the participants as they listened to the Pink Floyd song while undergoing surgery.

Why did the team choose Pink Floyd, and specifically “Another Brick in the Wall, Part 1”? “The scientific reason, which we mention in the paper, is that the song is very layered. It brings in complex chords, different instruments and diverse rhythms that make it interesting to analyze,” says Ludovic Bellier, a cognitive neuroscientist and the study’s lead author. “The less scientific reason might be that we just really like Pink Floyd.”

The AI model analyzed patterns in the brain’s response to various components of the song’s acoustic profile, picking apart changes in pitch, rhythm and tone. Then another AI model reassembled this disentangled composition to estimate the sounds that the patients heard. Once the brain data were fed through the model, the music returned. Its melody was roughly intact, and its lyrics were garbled but discernible if one knew what to listen for: “All in all, it was just a brick in the wall.”
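For readers curious what decoding sound from electrode data can look like in practice, here is a minimal sketch. It is not the study’s pipeline: it uses simulated data, a plain ridge regression, and hypothetical names (`simulate_recordings`, `fit_ridge`, `columnwise_correlation`) chosen only for illustration. It assumes each electrode responds to a noisy linear mix of the sound’s frequency bands, then learns a mapping back from electrode activity to those bands and scores it on held-out data.

```python
import numpy as np

def simulate_recordings(n_samples=2000, n_electrodes=40, n_freq_bins=16,
                        noise=0.5, seed=0):
    """Simulated stand-in for real data (an assumption, not the study's data)."""
    rng = np.random.default_rng(seed)
    # Toy "spectrogram": one row per time step, one column per frequency band.
    spectrogram = rng.standard_normal((n_samples, n_freq_bins))
    # Each electrode responds to a random linear mix of bands, plus noise.
    mixing = rng.standard_normal((n_freq_bins, n_electrodes))
    neural = spectrogram @ mixing
    neural += noise * rng.standard_normal((n_samples, n_electrodes))
    return neural, spectrogram

def fit_ridge(X, Y, alpha=1.0):
    """Closed-form ridge regression: W = (X^T X + alpha * I)^-1 X^T Y."""
    gram = X.T @ X + alpha * np.eye(X.shape[1])
    return np.linalg.solve(gram, X.T @ Y)

def columnwise_correlation(a, b):
    """Pearson correlation between matching columns of a and b."""
    a = a - a.mean(axis=0)
    b = b - b.mean(axis=0)
    return (a * b).sum(axis=0) / np.sqrt((a ** 2).sum(axis=0) * (b ** 2).sum(axis=0))

neural, spectrogram = simulate_recordings()
split = 1500  # first 1500 time steps for training, the rest held out

# Learn a map from electrode activity to frequency bands.
weights = fit_ridge(neural[:split], spectrogram[:split])

# Reconstruct the held-out spectrogram from electrode activity alone.
reconstructed = neural[split:] @ weights
scores = columnwise_correlation(reconstructed, spectrogram[split:])
print(f"mean correlation across frequency bands: {scores.mean():.2f}")
```

In a real system the reconstructed spectrogram would still have to be turned back into a waveform, a step this sketch leaves out.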

The model also revealed which parts of the brain responded to different musical features of the song. The researchers found that some portions of the brain’s audio processing center (located in the superior temporal gyrus, just behind and above the ear) respond to the onset of a voice or a synthesizer, while other areas groove to sustained hums.

Although the findings focused on music, the researchers expect their results to be most useful for translating brain waves into human speech. No matter the language, speech contains melodic nuances, including tempo, stress, accents and intonation. “These elements, which we call prosody, carry meaning that we can’t communicate with words alone,” Bellier says. He hopes the model will improve brain-computer interfaces, assistive devices that record speech-associated brain waves and use algorithms to reconstruct intended messages. This technology, still in its infancy, could help people who have lost the ability to speak because of conditions such as stroke or paralysis.

Jain says future research should investigate whether these models can be expanded from music that participants have heard to imagined internal speech. “I’m hopeful that these findings would translate because similar brain regions are engaged when people imagine speaking a word, compared with physically vocalizing that word,” she says. If a brain-computer interface could re-create someone’s speech with the inherent prosody and emotional weight found in music, it could reconstruct much more than just words. “Instead of robotically saying, ‘I. Love. You,’ you can yell, ‘I love you!’” Knight says.

Many hurdles remain before we can put this technology in the hands, or brains, of patients. For one thing, the model relies on electrical recordings taken directly from the surface of the brain. As brain recording techniques improve, it may be possible to gather these data without surgical implants, perhaps using ultrasensitive electrodes attached to the scalp instead. The latter technology can be used to identify single letters that participants imagine in their head, but the process takes about 20 seconds per letter, nowhere near the speed of natural speech, which hurries by at around 125 words per minute.
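To put that speed gap in numbers, here is a quick back-of-the-envelope calculation. The 20 seconds per letter and 125 words per minute come from the figures above; the average of five letters per word is an assumption added for illustration, not a number from the study.

```python
SECONDS_PER_LETTER = 20   # scalp-electrode letter identification (from the text)
SPEECH_WPM = 125          # natural speech rate (from the text)
LETTERS_PER_WORD = 5      # assumption: rough average word length

# 60 seconds / 20 seconds per letter = 3 letters per minute.
letters_per_minute = 60 / SECONDS_PER_LETTER

# Convert letters per minute to words per minute under the assumed word length.
scalp_wpm = letters_per_minute / LETTERS_PER_WORD

# How many times faster natural speech is than letter-by-letter decoding.
slowdown = SPEECH_WPM / scalp_wpm

print(f"scalp decoding: about {scalp_wpm:.1f} words per minute")
print(f"natural speech is roughly {slowdown:.0f} times faster")
```

Under that assumption, letter-by-letter decoding manages well under one word per minute, about two orders of magnitude slower than ordinary conversation.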

The researchers hope to make the garbled playback crisper and more comprehensible by packing the electrodes closer together on the brain’s surface, enabling an even more detailed look at the electrical symphony the brain produces. Last year a team at the University of California, San Diego, developed a densely packed electrode grid that offers brain-signal information at a resolution that is 100 times higher than that of current devices. “Today we reconstructed a song,” Knight says. “Maybe tomorrow we can reconstruct the entire Pink Floyd album.”
