The key to creating flexible machine-learning models that are capable of reasoning like people do may not be feeding them oodles of training data. Instead, a new study suggests, it might come down to how they are trained. These findings could be a big step toward better, less error-prone artificial intelligence models and could help illuminate the secrets of how AI systems, and humans, learn.

Humans are master remixers. When people understand the relationships among a set of components, such as food ingredients, we can combine them into all sorts of delicious recipes. With language, we can decipher sentences we’ve never encountered before and compose complex, original responses because we grasp the underlying meanings of words and the rules of grammar. In technical terms, these two examples are evidence of “compositionality,” or “systematic generalization,” which is often viewed as a key principle of human cognition. “I think that’s the most important definition of intelligence,” says Paul Smolensky, a cognitive scientist at Johns Hopkins University. “You can go from knowing about the parts to dealing with the whole.”

True compositionality may be central to the human mind, but machine-learning developers have struggled for decades to prove that AI systems can achieve it. A 35-year-old argument made by the late philosophers and cognitive scientists Jerry Fodor and Zenon Pylyshyn posits that the principle may be out of reach for standard neural networks. Today’s generative AI models can mimic compositionality, producing humanlike responses to written prompts. Yet even the most advanced models, such as OpenAI’s GPT-3 and GPT-4, still fall short of some benchmarks of this ability. For instance, if you ask ChatGPT a question, it might initially provide the correct answer. If you continue to send it follow-up queries, however, it might fail to stay on topic or begin contradicting itself. This suggests that although the models can regurgitate information from their training data, they don’t truly grasp the meaning and intention behind the sentences they produce.

But a novel training protocol that is focused on shaping how neural networks learn can boost an AI model’s ability to interpret information the way humans do, according to a study published on Wednesday in Nature. The findings suggest that a certain approach to AI education might produce compositional machine-learning models that can generalize just as well as people, at least in some instances.

“This research breaks important ground,” says Smolensky, who was not involved in the study. “It accomplishes something that we have wanted to accomplish and have not previously succeeded in.”

To train a system that seems capable of recombining components and understanding the meaning of novel, complex expressions, the researchers did not have to build an AI from scratch. “We didn’t need to fundamentally change the architecture,” says Brenden Lake, lead author of the study and a computational cognitive scientist at New York University. “We just had to give it practice.” The researchers started with a standard transformer model, the same sort of AI scaffolding that supports ChatGPT and Google’s Bard, but one that lacked any prior text training. They ran that basic neural network through a specially designed set of tasks meant to teach the program how to interpret a made-up language.

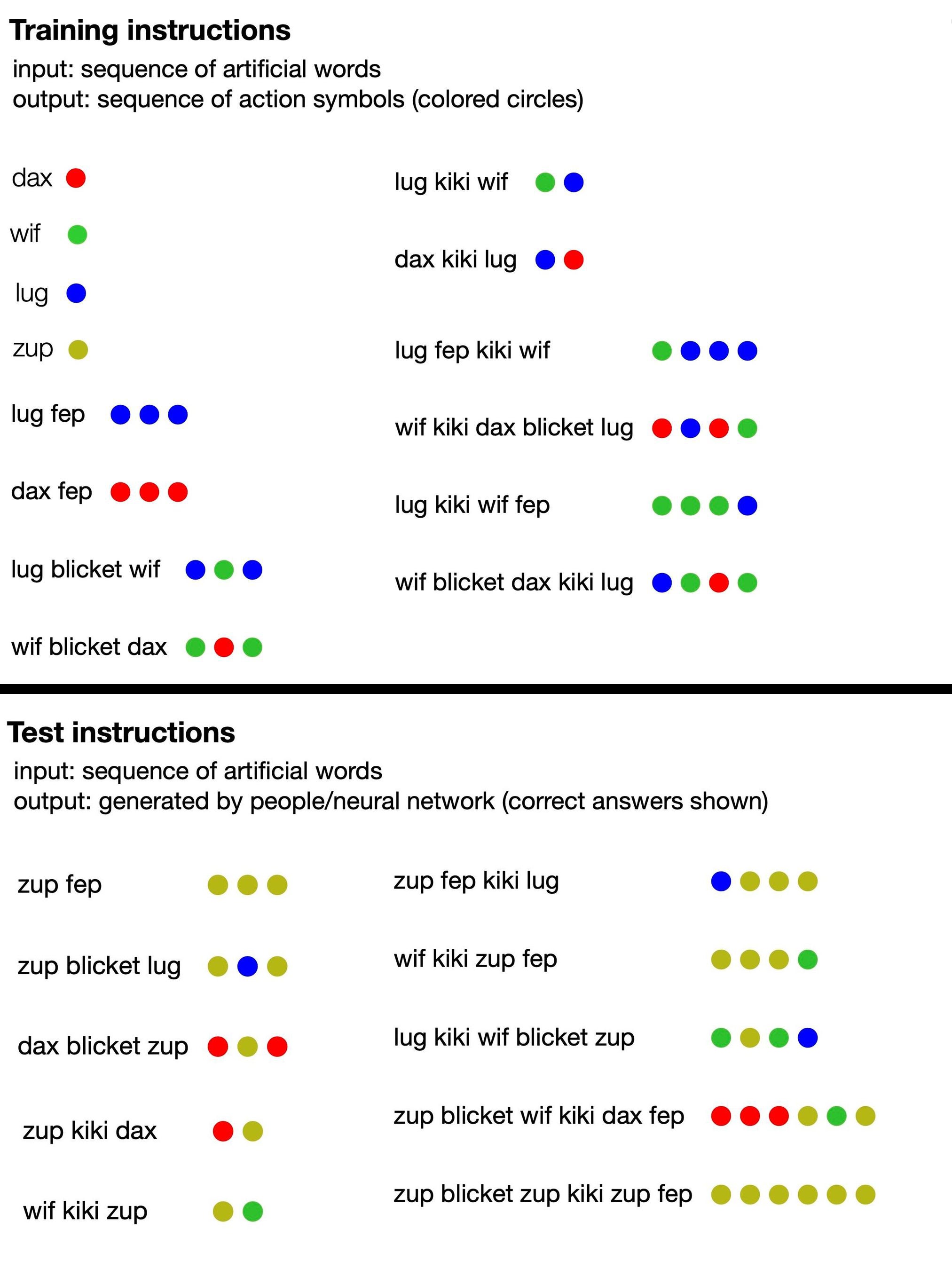

The language consisted of nonsense words (such as “dax,” “lug,” “kiki,” “fep” and “blicket”) that “translated” into sets of colorful dots. Some of these invented words were symbolic terms that directly represented dots of a certain color, while others signified functions that changed the order or number of dot outputs. For instance, dax represented a simple red dot, but fep was a function that, when paired with dax or any other symbolic word, multiplied its corresponding dot output by three. So “dax fep” would translate into three red dots. None of these rules were spelled out for the AI during training, however: the researchers just fed the model a handful of examples of nonsense sentences paired with the corresponding sets of dots.
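To make the hidden rule system concrete, here is a minimal Python sketch of an interpreter for a small fragment of the nonsense language. It encodes only what is described above (dax is a red dot; fep triples the output of the word it follows). The blue meaning given to lug is a hypothetical assumption added for illustration, and the other words are left out because their meanings aren’t specified here. This sketches the grammar the network had to infer from examples; it is not a description of how the network itself works.

```python
# Primitive words map directly to a dot color.
# Only "dax" -> red comes from the article; "lug" -> blue is a hypothetical
# assignment used purely for illustration.
PRIMITIVES = {"dax": "RED", "lug": "BLUE"}

# Function words transform the dots produced by the word before them.
# "fep" repeats the preceding output three times, as in "dax fep".
FUNCTIONS = {"fep": lambda dots: dots * 3}


def interpret(phrase: str) -> list[str]:
    """Translate a space-separated nonsense phrase into a list of dot colors."""
    output: list[str] = []
    last: list[str] = []  # dots produced by the most recent symbolic word
    for word in phrase.split():
        if word in PRIMITIVES:
            output.extend(last)          # commit the previous word's dots
            last = [PRIMITIVES[word]]    # start a new segment
        elif word in FUNCTIONS:
            if not last:
                raise ValueError(f"{word!r} needs a preceding symbolic word")
            last = FUNCTIONS[word](last)  # apply the function to that segment
        else:
            raise ValueError(f"unknown word: {word!r}")
    output.extend(last)
    return output


print(interpret("dax fep"))      # ['RED', 'RED', 'RED']
print(interpret("dax fep lug"))  # ['RED', 'RED', 'RED', 'BLUE']
```

Because fep acts on whatever the preceding word produced, the same rule combines with any symbolic word, including pairings never seen together before. That kind of reuse is what compositionality refers to.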

From there, the study authors prompted the model to produce its own series of dots in response to new phrases, and they graded the AI on whether it had correctly followed the language’s implied rules. Soon the neural network was able to respond coherently, following the logic of the nonsense language, even when introduced to new configurations of words. This suggests it could “understand” the made-up rules of the language and apply them to phrases it hadn’t been trained on.
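One simple way to grade responses like these is exact-match accuracy: a response counts as correct only if its entire dot sequence matches the answer the rules dictate. The sketch below assumes that scoring scheme for illustration; it is not necessarily the metric the study used, and the example responses are hypothetical rather than data from the paper.

```python
from typing import Sequence


def exact_match_accuracy(
    predictions: Sequence[Sequence[str]],
    targets: Sequence[Sequence[str]],
) -> float:
    """Fraction of responses whose whole dot sequence equals the target."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    if not targets:
        raise ValueError("need at least one example to score")
    # Order matters: the right colors in the wrong order count as wrong.
    correct = sum(list(p) == list(t) for p, t in zip(predictions, targets))
    return correct / len(targets)


# Hypothetical responses to three novel phrases (illustrative only).
targets = [["RED", "RED", "RED"], ["BLUE"], ["RED", "BLUE"]]
predictions = [["RED", "RED", "RED"], ["BLUE"], ["BLUE", "RED"]]
print(exact_match_accuracy(predictions, targets))  # 2 of 3 sequences match
```

Giving no partial credit is a deliberate choice here: since some words in the language change the order of the dots, a response with the right colors in the wrong order has not followed the rules.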

Additionally, the researchers tested their trained AI model’s understanding of the made-up language against 25 human participants. They found that, at its best, their optimized neural network responded 100 percent accurately, while human answers were correct about 81 percent of the time. (When the team fed GPT-4 the training prompts for the language and then asked it the test questions, the large language model was only 58 percent accurate.) Given additional training, the researchers’ standard transformer model started to mimic human reasoning so well that it made the same mistakes. For instance, human participants often erred by assuming there was a one-to-one relationship between specific words and dots, even though many of the phrases didn’t follow that pattern. When the model was fed examples of this behavior, it quickly began to replicate it and made the error at the same rate as humans did.

The model’s performance is particularly remarkable, given its small size. “This is not a large language model trained on the whole Internet; this is a relatively tiny transformer trained for these tasks,” says Armando Solar-Lezama, a computer scientist at the Massachusetts Institute of Technology, who was not involved in the new study. “It was interesting to see that nevertheless it’s able to exhibit these kinds of generalizations.” The finding implies that instead of just shoving ever more training data into machine-learning models, a complementary strategy might be to offer AI algorithms the equivalent of a focused linguistics or algebra class.

Solar-Lezama says this training method could theoretically provide an alternate path to better AI. “Once you’ve fed a model the whole Internet, there’s no second Internet to feed it to further improve. So I think strategies that force models to reason better, even in synthetic tasks, could have an impact going forward,” he says, with the caveat that there could be challenges to scaling up the new training protocol. At the same time, Solar-Lezama believes such studies of smaller models help us better understand the “black box” of neural networks and could shed light on the so-called emergent abilities of larger AI systems.

Smolensky adds that this study, along with similar work in the future, might also boost humans’ understanding of our own mind. That could help us design systems that minimize our species’ error-prone tendencies.

In the present, however, these benefits remain hypothetical, and there are a couple of big limitations. “Despite its successes, their algorithm doesn’t solve every challenge raised,” says Ruslan Salakhutdinov, a computer scientist at Carnegie Mellon University, who was not involved in the study. “It doesn’t automatically handle unpracticed forms of generalization.” In other words, the training protocol helped the model excel at one type of task: learning the patterns in a fake language. But given a whole new task, it couldn’t apply the same skill. This was evident in benchmark tests, where the model failed to handle longer sequences and couldn’t grasp previously unintroduced “words.”

And crucially, every expert Scientific American spoke with noted that a neural network capable of limited generalization is very different from the holy grail of artificial general intelligence, wherein computer models surpass human capacity in most tasks. You could argue that “it’s a very, very, very small step in that direction,” Solar-Lezama says. “But we’re not talking about an AI acquiring capabilities by itself.”

From limited interactions with AI chatbots, which can present an illusion of hypercompetency, and abundant circulating hype, many people may have inflated ideas of neural networks’ powers. “Some people might find it surprising that these kinds of linguistic generalization tasks are really hard for systems like GPT-4 to do out of the box,” Solar-Lezama says. The new study’s findings, though exciting, could inadvertently serve as a reality check. “It’s really important to keep track of what these systems are capable of doing,” he says, “but also of what they can’t.”