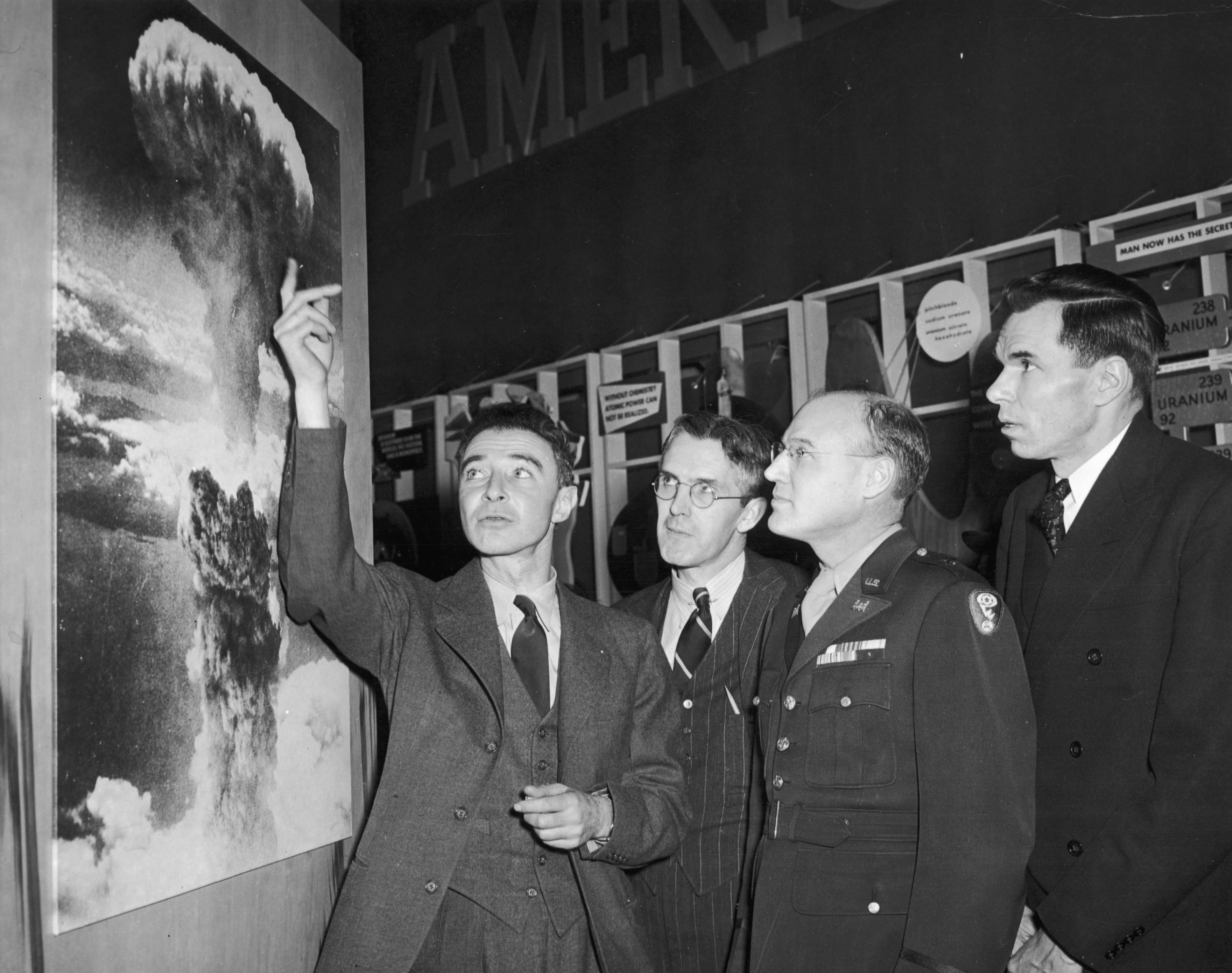

Eight decades ago, President Franklin D. Roosevelt tasked the young physicist J. Robert Oppenheimer with setting up a top-secret laboratory in Los Alamos, N.M. Along with his colleagues, Oppenheimer was charged with developing the world’s first nuclear weapons under the code name the Manhattan Project. Less than three years later, they succeeded. In 1945 the U.S. dropped these weapons on the residents of the Japanese cities of Hiroshima and Nagasaki, killing hundreds of thousands of people.

Oppenheimer became known as “the father of the atomic bomb.” Despite his misplaced satisfaction with his wartime service and technological achievement, he also became vocal about the need to contain this dangerous technology.

But the U.S. didn’t heed his warnings, and geopolitical fear won the day instead. The nation raced to deploy ever more powerful nuclear systems with scant recognition of the immense and disproportionate harm these weapons would cause. Officials also ignored Oppenheimer’s calls for greater international collaboration to regulate nuclear technology.

Oppenheimer’s example holds lessons for us today, too. We must not make the same mistake with artificial intelligence that we made with nuclear weapons.

We are still in the early stages of a promised artificial intelligence revolution. Tech companies are racing to build and deploy AI-powered large language models, such as ChatGPT. Regulators need to keep up.

Although AI promises immense benefits, it has already revealed its potential for harm and abuse. Earlier this year the U.S. surgeon general released a report on the youth mental health crisis. It found that one in three teenage girls considered suicide in 2021. The data are unequivocal: big tech is a big part of the problem. AI will only amplify that manipulation and exploitation. The performance of AI rests on exploitative labor practices, both domestically and internationally. And massive, opaque AI models that are fed problematic data often exacerbate existing biases in society, influencing everything from criminal sentencing and policing to health care, lending, housing and hiring. In addition, the environmental impacts of running such energy-hungry AI models stress already fragile ecosystems reeling from the impacts of climate change.

AI also promises to make potentially dangerous technology more accessible to rogue actors. Last year researchers asked a generative AI model to design new chemical weapons. It generated 40,000 potential weapons in six hours. An earlier version of ChatGPT produced bomb-making instructions. And a class exercise at the Massachusetts Institute of Technology recently demonstrated how AI can help create synthetic pathogens, which could potentially ignite the next pandemic. By spreading access to such dangerous information, AI threatens to become the computerized equivalent of an assault weapon or a high-capacity magazine: a vehicle for one rogue person to unleash devastating harm at a magnitude never seen before.

Yet companies and private actors are not the only ones racing to deploy untested AI. We must also be wary of governments pushing to militarize AI. We already have a nuclear launch system precariously perched on mutually assured destruction, one that gives world leaders just a few minutes to decide whether to launch nuclear weapons in the event of a perceived incoming attack. AI-powered automation of nuclear launch systems could soon eliminate the practice of keeping a “human in the loop,” a necessary safeguard to ensure that faulty computerized intelligence doesn’t lead to nuclear war, which has come close to happening many times already. A military automation race, designed to give decision-makers a greater capacity to respond in an increasingly complex world, could lead to conflicts spiraling out of control. If nations rush to adopt militarized AI technology, we will all lose.

As in the 1940s, there is a critical window to shape the development of this emerging and potentially dangerous technology. Oppenheimer recognized that the U.S. should work with even its deepest antagonists to internationally control the dangerous side of nuclear technology while still pursuing its peaceful uses. Castigating the man and the idea, the U.S. instead kicked off a vast cold war arms race by developing hydrogen bombs, along with related costly and often bizarre delivery systems and atmospheric testing. The resulting nuclear-industrial complex disproportionately harmed the most vulnerable. Uranium mining and atmospheric testing caused cancer among groups that included residents of New Mexico, Marshallese communities and members of the Navajo Nation. The wasteful spending, opportunity cost and impact on marginalized communities were incalculable, to say nothing of the numerous close calls and the proliferation of nuclear weapons that ensued. Today we need both international cooperation and domestic regulation to ensure that AI develops safely.

Congress must act now to regulate tech companies to ensure that they prioritize the collective public interest. Congress should begin by passing my Children’s Online Privacy Protection Act, my Algorithmic Justice and Online Platform Transparency Act and my bill prohibiting the launch of nuclear weapons by AI. But that’s just the beginning. Guided by the White House’s Blueprint for an AI Bill of Rights, Congress needs to pass broad legislation to stop this reckless race to build and deploy unsafe artificial intelligence. Decisions about how and where to use AI cannot be left to tech companies alone. They must be made by centering the communities most vulnerable to exploitation and harm from AI. And we must be open to working with allies and adversaries alike to prevent both military and civilian abuses of AI.

At the start of the nuclear age, rather than heed Oppenheimer’s warning about the dangers of an arms race, the U.S. fired the starting gun. Eight decades later, we have a moral responsibility and a clear interest in not repeating that mistake.
